This is a write-up of a talk I gave at BrooklynJS on 12/18/2014, titled “Build apps, not infrastructure”.


While most of us love building apps, we’d also love it if people used them, and if we’re lucky, lots of people. Problem is, then we really need to care about how our app runs and scales, and it’s best to get that figured out before that moment of fame arrives. Let’s look at a few of the tools and services you can use to manage your infrastructure, so you can spend more time building your apps and less time keeping them running.

Yes, we have all changed code directly in production (and you’re lying if you say you haven’t). We do this despite knowing that it’s horrible:

- How many times have you found that someone changed production directly and forgot to check it in?

- Or made [what you thought was] a simple code change to fix a specific problem, but instead it revealed further problems.

- Or users seeing intermittent brokenness while you make changes.

- Or how do you know it works if you haven’t written tests for it?

- Or! OR!

Heck, one company even has whoever edits code in production wear a pink sombrero, to raise awareness of “Cowboy Coding”.

Our reasons for making changes directly in production are usually good. Sometimes they’re not. Regardless, we resort to it because our deployment processes aren’t automated enough to ship those changes safely any other way.

Think about it - if we could quickly write a fix in a clone of production, test it, and push it to production in seconds, wouldn’t we do that instead?

It’s a lot of work to fully automate deployments, so most people have a fairly manual deployment process. Which is crazy, considering we’re programmers - all we do is automate.

This happens mainly because we prioritize features, or enhancements to our applications, over chores, which can be enhancements to our infrastructure. This distinction comes from Scrum, where you’re rewarded for completing features, as those “add business value”…

Complete and utter bullshit…

A better infrastructure makes it easier to ship features. A shitty or non-existent infrastructure makes adding features exponentially harder as the system gets more complicated. Infrastructure improvements are therefore really just features as well, because they do provide end-user value, eventually.

For example, most people would consider “User Signup” to be a feature, because a user interacts with it directly. At the same time, it’s really part of your infrastructure: it’s not the product itself, but a necessary evil for using the product. Yet you’d never make signup a manual process, because no one would sign up and you’d have no users.

The same should go for deployments. If they aren’t automatic, you’ll be afraid of deploying and will favor large deployments, which create more bugs, and a slew of other anti-patterns will creep into your process. To build a great product, you need an equally great infrastructure. Your users will feel the pain when your infrastructure doesn’t support your ability to enhance your product.

Whoa, far out man…

So, what would we want this infrastructure-as-a-product to do for us?

- Runs the Twelve-Factor App: this methodology for building web applications and services leads to more maintainable and scalable products. We’ll want our infrastructure to run these apps, because these are the apps we’ll write. Read more about 12-factor apps.

- Deploy a new app: new apps should be deployable to any environment within minutes. Since we prefer micro-services over more monolithic architectures, this is an important feature, because new servers will be provisioned very often. Infrastructures that require significant effort to provision new servers tend to avoid this pattern, which leads to applications that are harder to scale and evolve.

- Automated scaling: every web app or service should sit behind its own load balancer, to which you can easily add or remove individual servers. Creating these servers should be fully automated, so that more instances can be launched and added to the load balancer for you.

- Deploying should not introduce downtime: deploying an existing app or service shouldn’t make it unavailable during the deployment. Deployments should also support rollback, in case a new deployment isn’t functioning properly, and versioning, so you can run multiple versions of the same app.

- Create and clone environments: environments (a collection of all servers and configuration) should be easy to create or clone. An environment can live anywhere: in the cloud, or locally to develop against.

- Everything is automated: doing any of the above should involve minimal manual processes. Any modifications made to a default environment should be repeatable.
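The rollback and versioning requirements above can be sketched with a tiny in-memory model. It assumes a Heroku-style approach: every deploy creates a new, immutable, numbered release, and a rollback re-activates the previous one. `Release` and `ReleaseHistory` are hypothetical names, not part of any tool mentioned here.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(frozen=True)
class Release:
    """An immutable, numbered release (hypothetical model)."""
    number: int
    version: str  # e.g. a git commit SHA or build tag


@dataclass
class ReleaseHistory:
    """Keeps every release so that older versions can be re-activated."""
    releases: List[Release] = field(default_factory=list)
    active: Optional[Release] = None

    def deploy(self, version: str) -> Release:
        # Each deploy appends a new release instead of mutating an old one.
        release = Release(number=len(self.releases) + 1, version=version)
        self.releases.append(release)
        self.active = release
        return release

    def rollback(self) -> Release:
        # Re-activate the release immediately before the active one.
        if self.active is None or self.active.number == 1:
            raise RuntimeError("no earlier release to roll back to")
        previous = self.releases[self.active.number - 2]
        self.active = previous
        return previous
```

Because old releases are never overwritten, rolling back only switches which release is active. In a real system, "active" would mean the version the load balancer routes traffic to.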

Sounds awesome, right? Does it already exist? Does it kind of already exist? Let’s see what tools and services already exist that could help us out:

Heroku is “the” platform-as-a-service. It popularized many of the 12-factor app practices, and it was the first to use git push to trigger deployments. Most importantly, it provides developers with a clean abstraction of an app, hiding the complexities of how to run it. While Heroku’s feature set is almost exactly what we want, this abstraction comes at a price - quite literally.

Heroku is fairly expensive ($34.50/month for the low-end 2-server setup at the time), and it becomes more expensive as you use add-ons (for example, $50/month for low-end Postgres). Heroku is also much more difficult (and expensive) to use for a utility server like Jenkins or Graphite, which may have higher CPU requirements (nothing worse than slow builds).

That’s not to say you shouldn’t use Heroku; it’s a great service. Just be aware of the pros and cons.

That said, how Heroku deploys applications is very interesting. Heroku has the concept of a buildpack, which contains the logic to detect a specific type of application and build it. Heroku Buildpacks are all open-source, so you can write your own and have Heroku run almost anything. We can also use Heroku Buildpacks to provision our own servers…
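A real buildpack does its detection in an executable `bin/detect` script. The script receives the build directory and exits with status 0 if it recognizes the app. The sketch below mimics only that selection step in Python. It assumes simplified marker files (`package.json` for Node.js, `requirements.txt` for Python, `Gemfile` for Ruby); the actual buildpacks check more than this. `detect_buildpack` is a hypothetical helper.

```python
from pathlib import Path
from typing import Optional

# Simplified marker files; real buildpacks use more thorough checks.
BUILDPACK_MARKERS = [
    ("Node.js", "package.json"),
    ("Python", "requirements.txt"),
    ("Ruby", "Gemfile"),
]


def detect_buildpack(build_dir: str) -> Optional[str]:
    """Return the name of the first buildpack whose marker file exists.

    Mirrors the idea of running each buildpack's detect step in order
    and picking the first one that succeeds.
    """
    root = Path(build_dir)
    for name, marker in BUILDPACK_MARKERS:
        if (root / marker).is_file():
            return name
    return None  # no buildpack recognized the app
```

Order matters here: the first matching buildpack wins, much like trying a list of buildpacks one after another.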

Docker provides application containers, which give applications an isolated view of the operating system: their own process ID space, filesystem, and network interfaces. It also provides constraints on system resources (memory, CPU, network I/O).

Essentially, Docker can be used to provision and deploy applications as an alternative to Heroku Buildpacks. The difference is that Docker can run an identical environment locally and when deployed, and it can also run other apps like databases.
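As a rough illustration of those resource constraints, the helper below builds a `docker run` command line that caps a container's memory and weights its CPU share. The flags used (`--detach`, `--name`, `--memory`, `--cpu-shares`, `--publish`, `--env`) are standard `docker run` options. The function name, the image name, and the specific limits are assumptions for the example, and only memory and CPU limits are shown.

```python
from typing import Dict, List, Optional


def docker_run_command(
    image: str,
    name: str,
    memory: str = "512m",
    cpu_shares: int = 512,
    host_port: int = 8080,
    container_port: int = 5000,
    env: Optional[Dict[str, str]] = None,
) -> List[str]:
    """Build a `docker run` argument list with resource limits applied."""
    cmd = [
        "docker", "run",
        "--detach",                       # run in the background
        "--name", name,
        "--memory", memory,               # hard cap on the container's memory
        "--cpu-shares", str(cpu_shares),  # relative CPU weight (Docker's default is 1024)
        "--publish", f"{host_port}:{container_port}",
    ]
    # Pass configuration through environment variables, 12-factor style.
    for key, value in sorted((env or {}).items()):
        cmd += ["--env", f"{key}={value}"]
    cmd.append(image)  # the image must come after all options
    return cmd
```

On a machine with Docker installed, you could pass the resulting list to `subprocess.run(cmd, check=True)`. Running the same image the same way on a laptop and on a server is what gives you the identical environment.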

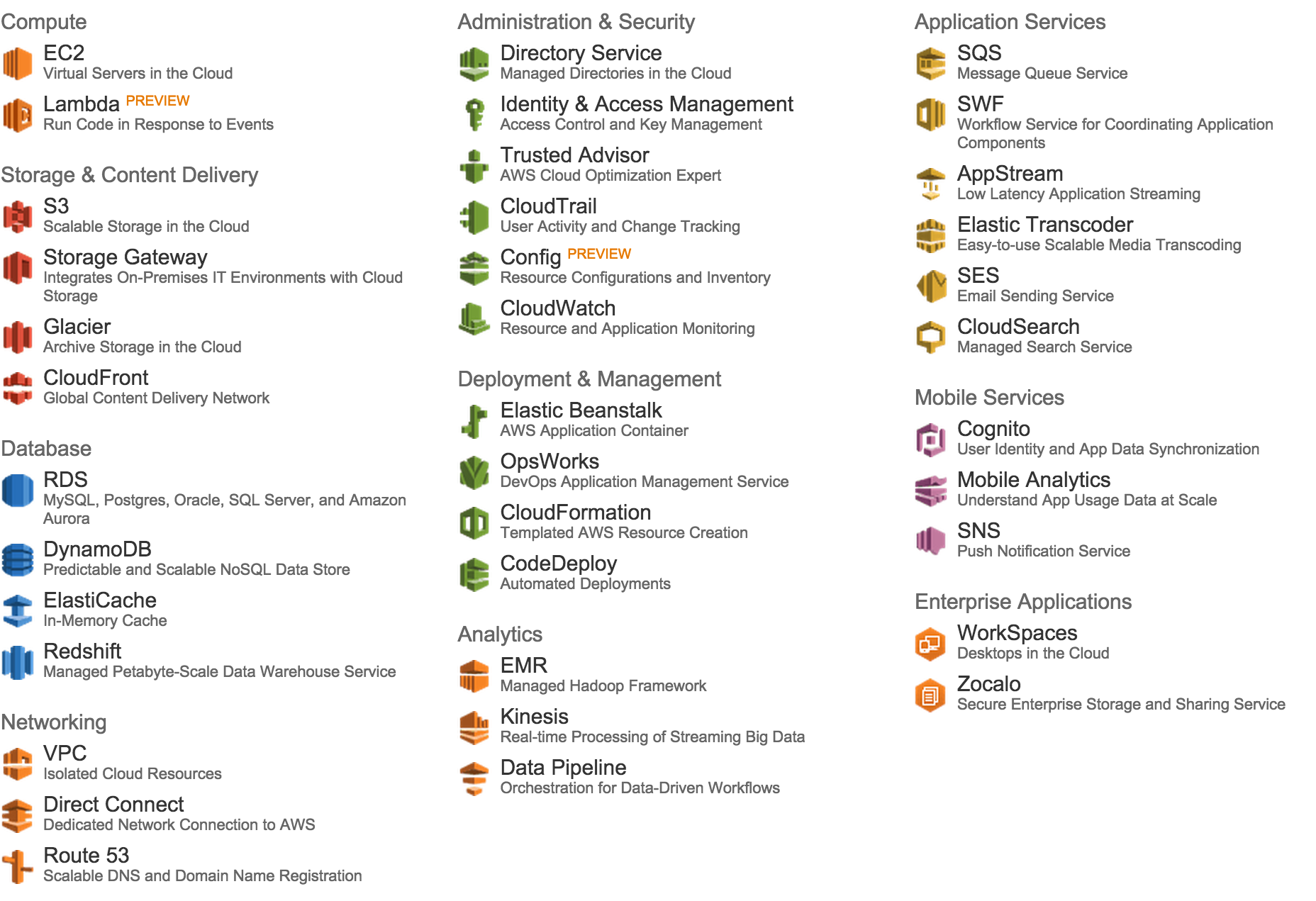

Amazon Web Services (AWS) is a massive collection of services: 37 different services, to be exact.

At the core is Elastic Compute Cloud (EC2), which allows you to run and manage arbitrary virtual machines in Amazon’s data centers. EC2 also has sub-services to help route network traffic to your VMs, specifically a TCP and HTTP load-balancer, as well as Auto Scaling, which helps you launch instances and add them to a load-balancer.
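The core decision that Auto Scaling automates can be sketched as a small function. It compares a load metric against thresholds and adjusts the desired number of instances, clamped to a minimum and maximum. This is a simplified, threshold-based model, not AWS's actual implementation. The thresholds, the step size, and the `desired_capacity` name are assumptions.

```python
def desired_capacity(
    current: int,
    avg_cpu_percent: float,
    min_size: int = 2,
    max_size: int = 10,
    scale_out_above: float = 70.0,
    scale_in_below: float = 30.0,
    step: int = 1,
) -> int:
    """Return how many instances the group should run next (simplified model)."""
    if avg_cpu_percent > scale_out_above:
        target = current + step   # busy: launch more instances
    elif avg_cpu_percent < scale_in_below:
        target = current - step   # idle: terminate some instances
    else:
        target = current          # within the comfortable band
    # Never go outside the group's configured bounds.
    return max(min_size, min(max_size, target))
```

Keeping a gap between the scale-out and scale-in thresholds stops the group from flapping back and forth when load hovers around a single value.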

Another useful service is Simple Storage Service (S3), which stores arbitrary data like a file system. It can also serve those files over HTTP, making it an ideal static HTTP server, as you don’t have to manage servers or load-balancers.
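S3 serves each object with the Content-Type stored in its metadata. A static-site deploy therefore mostly comes down to mapping local files to object keys with the right types. Here is a minimal sketch of that planning step using only the standard library. `plan_static_upload` is a hypothetical helper, and the actual upload (for example, with an AWS SDK) is left out.

```python
import mimetypes
from pathlib import Path
from typing import List, Tuple


def plan_static_upload(site_dir: str) -> List[Tuple[str, str, str]]:
    """Return (local_path, s3_key, content_type) for every file under site_dir."""
    root = Path(site_dir)
    plan = []
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue  # directories don't become objects
        # Keys use forward slashes regardless of the local OS.
        key = path.relative_to(root).as_posix()
        content_type, _ = mimetypes.guess_type(path.name)
        # Fall back to a generic binary type when the extension is unknown.
        plan.append((str(path), key, content_type or "application/octet-stream"))
    return plan
```

Getting the type right matters: an HTML page stored without `text/html` may be downloaded by the browser instead of rendered.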

The 35 other services do lots of useful things, like various data storage services, private networks, DNS, app and mobile services, deployment/provisioning stuff, oh my! It can get very confusing for newcomers and experienced engineers alike, because some of the services overlap in functionality. For example, OpsWorks, CloudFormation, and Beanstalk can all do very similar things. Others compete with existing open-source offerings, like DynamoDB vs. MongoDB.

Elastic Beanstalk is worth noting, because it provides a similar feature set to Heroku: it can deploy applications to EC2 instances, and it automatically configures auto scaling and load balancing. However, the pre-configured environments are awkward to customize, since they rely on the special .elasticbeanstalk and .ebextensions directories. Creating a custom AMI for Elastic Beanstalk also somewhat violates the “infrastructure as code” tenet. And it’s all or nothing: if your application’s language isn’t supported by Elastic Beanstalk, like Go, you can’t use it.

Recently, Amazon improved Elastic Beanstalk by adding support for Docker, which can be used to avoid all the environment quirks, standardize provisioning, and support other languages. It also supports rolling updates, meaning there’s no downtime during a deployment.
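A rolling update avoids downtime by replacing instances in small batches, so that most of the fleet keeps serving traffic at any moment. The simulation below sketches the idea with plain Python objects. The `Instance` class, the batch size, and the health-check callback are hypothetical stand-ins for what Elastic Beanstalk (or a load balancer API) does for real.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Instance:
    name: str
    version: str
    in_service: bool = True  # registered with the load balancer


def rolling_update(
    instances: List[Instance],
    new_version: str,
    batch_size: int,
    is_healthy: Callable[[Instance], bool],
) -> int:
    """Update instances batch by batch; return the fewest in service at once."""
    min_in_service = len(instances)
    for start in range(0, len(instances), batch_size):
        batch = instances[start:start + batch_size]
        for inst in batch:
            inst.in_service = False  # drain: take it out of the load balancer
        serving = sum(i.in_service for i in instances)
        min_in_service = min(min_in_service, serving)
        for inst in batch:
            inst.version = new_version  # deploy the new code
            if not is_healthy(inst):
                # Stop here so the remaining instances keep the old version.
                raise RuntimeError(f"{inst.name} failed its health check")
            inst.in_service = True  # healthy again: back into rotation
    return min_in_service
```

Smaller batches keep more capacity online but make the deployment take longer. Stopping at the first failed health check leaves the rest of the fleet on the old version, ready for a rollback.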

Amazon has recently added some new services, currently in preview, which are promising:

- CodeDeploy manages applications and how to deploy them (some overlap with Beanstalk, but a bit more general)

- EC2 Container Service supports clustering of Docker containers

- Lambda - respond to events with code.
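Lambda’s model is a function that AWS invokes with an event payload. Lambda launched with Node.js only, and Python support came later. To keep to one language, here is a sketch of a Python handler that reacts to S3 upload notifications. The `Records[].s3.bucket.name` / `s3.object.key` shape follows S3’s event notification format. The handler’s name and what it does with each record are assumptions for illustration.

```python
from typing import Any, Dict, List
from urllib.parse import unquote_plus


def handler(event: Dict[str, Any], context: Any) -> Dict[str, List[str]]:
    """Return 'bucket/key' for every object referenced in an S3 notification."""
    uploaded = []
    for record in event.get("Records", []):
        s3 = record["s3"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(s3["object"]["key"])
        uploaded.append(f"{s3['bucket']['name']}/{key}")
    return {"uploaded": uploaded}
```

There is no server to provision or scale here: the code only runs when an event arrives.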

For those completely overwhelmed by AWS, or just looking for something simpler, Digital Ocean is basically the equivalent of EC2 and Route53, but with an awesome website and API that make it much easier to work with.

Because it only runs droplets (their word for VMs), any load balancing needs to be done with another dedicated droplet (e.g. HAProxy) and scaled manually.
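With no managed load balancer, one way to “scale manually” is to regenerate the HAProxy configuration whenever droplets are added or removed, then reload HAProxy. The sketch below renders a minimal `frontend`/`backend` section from a list of droplet IPs. The function name, ports, and backend name are assumptions. A production config would also need `global` and `defaults` sections (timeouts, logging, mode).

```python
from typing import List


def haproxy_config(droplet_ips: List[str], app_port: int = 8080) -> str:
    """Render a minimal HAProxy frontend/backend for a set of app droplets."""
    lines = [
        "frontend http-in",
        "    bind *:80",
        "    default_backend app",
        "",
        "backend app",
        "    balance roundrobin",
    ]
    for n, ip in enumerate(droplet_ips, start=1):
        # 'check' enables health checks so dead droplets are skipped.
        lines.append(f"    server app{n} {ip}:{app_port} check")
    return "\n".join(lines) + "\n"
```

Adding a droplet then means appending its IP, rewriting the file, and reloading HAProxy. This manual step is exactly what Auto Scaling and ELB handle for you on AWS.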

It seems like we can use all of this for the major features of our infrastructure, but nothing ties it all together and makes it as easy as Heroku.

Riker gives you Heroku-like application deployments for Amazon Web Services. It automates all the best-practices of application deployment, so you don’t have to piece things together.

Riker deploys any application with a buildpack to AWS, and automatically configures load balancing and auto scaling for you, as well as manages rolling deployments. It also can deploy static websites to S3.

Next, I’d like to make the target “cloud” extensible (most likely starting with Digital Ocean), support Dockerfiles as an alternative to buildpacks, and use some of AWS’s new features, like the EC2 Container Service, CodeDeploy, and Lambda.

Check out my initial post about Riker, which covers the same content I spoke about in my talk. Here are some other useful links:

- Project on GitHub

- Riker on Python Package Index

- Issues list (for the roadmap, as well as to report bugs)

- Pull requests would be much appreciated!

If you want to (eventually) spend less time managing your infrastructure, and more time building products, or are just interested in making infrastructure management better, give Riker a try and come help me build it!

Yeah, I’m a Trekkie…